Project Goals

Activities and Results

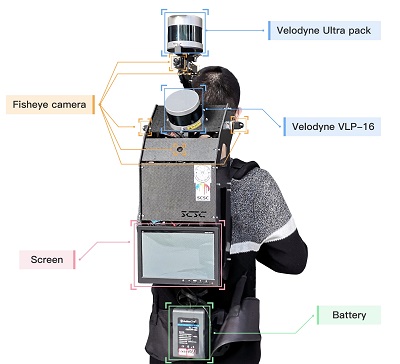

Figure 1. The XBeibao system with a smartphone attached on top

- Indoor LiDAR-based SLAM dataset (Download)

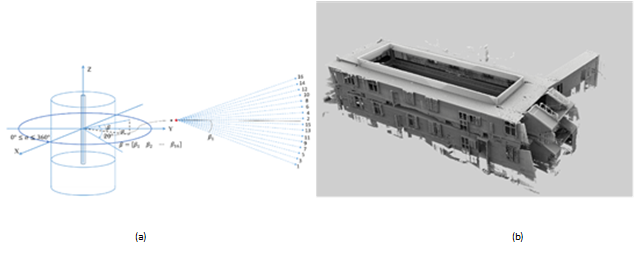

We collected an indoor point cloud dataset in three multi-floor buildings with the upgraded XBeibao. The dataset represents typical indoor building complexity. We provide raw data of one indoor scene with ground truth so that users can evaluate their own results. We also provide raw data of two scenes for which results are evaluated upon submission. Results are evaluated by their error relative to the ground truth point cloud, which was acquired with a terrestrial laser scanner (TLS) of millimeter-level accuracy (Figure 2(b)).
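As an illustration of what an error to a reference point cloud can look like, the sketch below computes the mean and RMS nearest-neighbor distance from an estimated cloud to a reference cloud. This is one common cloud-to-cloud measure and is only an assumption here; the exact metric used by the benchmark is not specified above. The function names and the toy coordinates are hypothetical, and the brute-force search is meant for small samples only (a spatial index such as a k-d tree would be needed for real scans).

```python
import math


def nearest_distance(point, reference):
    """Distance from one point to its closest point in the reference cloud."""
    return min(math.dist(point, ref) for ref in reference)


def cloud_to_reference_error(estimated, reference):
    """Mean and RMS of nearest-neighbor distances (brute force, O(n * m))."""
    distances = [nearest_distance(p, reference) for p in estimated]
    mean = sum(distances) / len(distances)
    rms = math.sqrt(sum(d * d for d in distances) / len(distances))
    return mean, rms


# Toy data in meters (hypothetical): a tiny TLS-like reference cloud and a
# SLAM-like estimate already expressed in the same coordinate frame.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
estimated = [(0.0, 0.0, 0.1), (1.0, 0.0, -0.1), (0.0, 1.0, 0.0)]

mean_err, rms_err = cloud_to_reference_error(estimated, reference)
print(f"mean error: {mean_err:.4f} m, RMS error: {rms_err:.4f} m")
```

The sketch assumes both clouds are already registered in a common frame; in practice an alignment step would precede the distance computation.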

- BIM feature extraction dataset (Download)

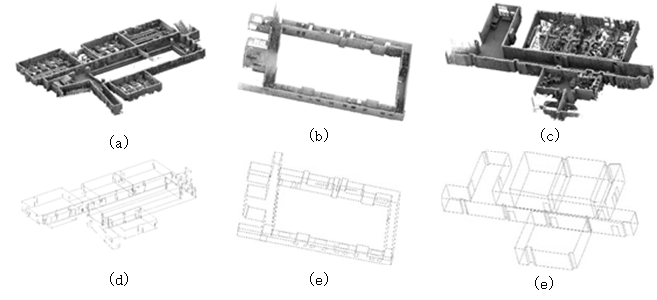

We established three datasets for evaluating BIM feature extraction from indoor 3D point clouds. The ground truth data was built manually, and examples are presented in Figure 3.

- Indoor positioning dataset (Download)

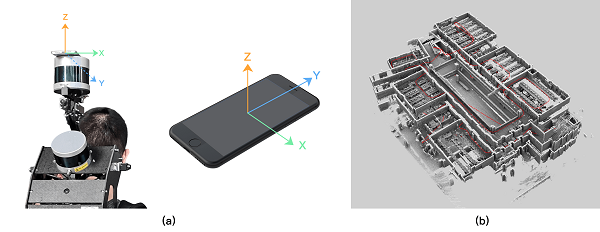

We provide two data sequences with ground truth so that users can evaluate their own results. We also provide three data sequences for which results are evaluated upon submission. Results are evaluated by their error relative to the centimeter-level accuracy platform trajectory obtained from the SLAM processing (Figure 4).
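To make the idea of an error to a reference trajectory concrete, the sketch below interpolates a time-synchronized reference trajectory at the timestamps of the estimated positions and reports the root-mean-square position error. The metric, the function names, and the toy values are assumptions for illustration; the benchmark's actual scoring procedure is not described above.

```python
import bisect
import math


def interpolate_reference(ref_times, ref_positions, t):
    """Linearly interpolate the reference position at time t (clamped at the ends)."""
    if t <= ref_times[0]:
        return ref_positions[0]
    if t >= ref_times[-1]:
        return ref_positions[-1]
    i = bisect.bisect_right(ref_times, t)  # first reference sample after t
    t0, t1 = ref_times[i - 1], ref_times[i]
    w = (t - t0) / (t1 - t0)
    p0, p1 = ref_positions[i - 1], ref_positions[i]
    return tuple(a + w * (b - a) for a, b in zip(p0, p1))


def positioning_rmse(est_times, est_positions, ref_times, ref_positions):
    """RMS distance between estimated positions and the interpolated reference."""
    squared = []
    for t, p in zip(est_times, est_positions):
        r = interpolate_reference(ref_times, ref_positions, t)
        squared.append(math.dist(p, r) ** 2)
    return math.sqrt(sum(squared) / len(squared))


# Toy data (hypothetical): reference trajectory along the x axis, sampled at
# strictly increasing times in seconds, and two smartphone position estimates.
ref_times = [0.0, 1.0, 2.0]
ref_positions = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
est_times = [0.5, 1.5]
est_positions = [(0.5, 0.3), (1.5, -0.4)]

rmse = positioning_rmse(est_times, est_positions, ref_times, ref_positions)
print(f"positioning RMSE: {rmse:.4f} m")
```

The sketch assumes that both trajectories share a clock and a coordinate frame, and that the reference timestamps are strictly increasing.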

Figure 2. Illustration of indoor LiDAR-based SLAM dataset

(a) multi-beam laser scanning data (b) the TLS reference point cloud

Figure 3. Illustration of BIM feature extraction dataset

(a-c) indoor point clouds (d-f) the corresponding BIM frame features

Figure 4. Illustration of smartphone-based indoor positioning dataset

(a) Setup of the attached smartphone (b) SLAM platform trajectory as synchronized reference

Project Investigators

Dr. Cheng Wang (Principal Investigator)

Xiamen University, China

Dr. Naser Elsheimy (Co-Investigator)

University of Calgary, Canada

Dr. Chenglu Wen (Co-Investigator)

Xiamen University, China

Dr. Guenther Retscher (Co-Investigator)

Vienna University of Technology, Austria

Dr. Zhizhong Kang (Co-Investigator)

China University of Geosciences, Beijing

Dr. Andrea Lingua (Co-Investigator)

Polytechnic University of Turin, Italy